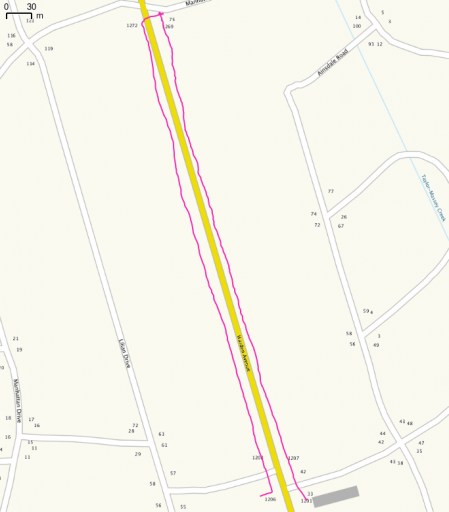

Took me nine minutes to legally cross Warden Avenue. I had to walk roughly 400 m to get to a crossing, then 400 m back down the street. Straight-line distance between the bus stop and my destination is about 40 m.

Would drivers put up with this?

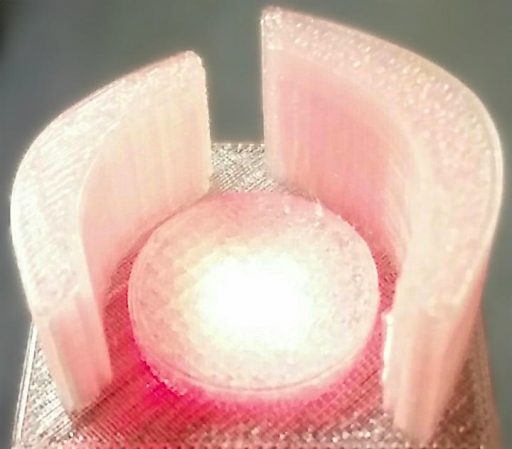

Based on Selasi Dorkenoo and Claus Rinner’s presentation at Toronto #Maptime – 3D Printing Demo. More later.

I’m talking about data and tools at Maptime Toronto tomorrow. Here are my slides: maptime201511-tools_and_data.

Generated using ptrv/gpx2spatialite, rendered in QGIS as lines with 75% transparency.

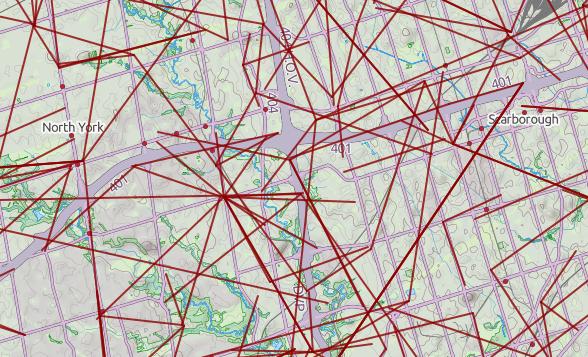

@MaptimeTO asked me to summarize the brief talk I gave last week at Maptime Toronto on making maps from the Technical and Administrative Frequency List (TAFL) radio database. It was mostly taken from posts on this blog, but here goes:

It was Doors Open Toronto last weekend, and the city published the locations as open data: Doors Open Toronto 2013. I thought I’d try to geocode it after Richard suggested we take a look. OpenStreetMap has the Nominatim geocoder, which you can use freely as long as you accept restrictions on bulk queries.

As a good and lazy programmer, I first tried to find pre-built modules. Mistake #1; they weren’t up to snuff:

So I rolled my own, using nowt but the Nominatim Search Service Developer’s Guide, and good old simple modules like URI::Escape, LWP::Simple, and JSON::XS. Much to my surprise, it worked!

Much as I love XML, it’s a bit hard to read as a human, so I smashed the Doors Open data down to simple pipe-separated text: dot.txt. Here’s my code, ever so slightly specialized for searching in Toronto:

```perl
#!/usr/bin/perl -w
# geonom.pl - geocode pipe-separated addresses with nominatim
# created by scruss on 02013/05/28

use strict;
use URI::Escape;
use LWP::Simple;
use JSON::XS;

# the URL for OpenMapQuest's Nominatim service
use constant BASEURI => 'http://open.mapquestapi.com/nominatim/v1/search.php';

# read pipe-separated values from stdin
# two fields: Site Name, Street Address
while (<>) {
    chomp;
    my ( $name, $address ) = split( '\|', $_, 2 );
    my %query_hash = (
        format => 'json',
        street => cleanaddress($address),    # decruft address a bit

        # You'll want to change these ...
        city           => 'Toronto',         # fixme
        state          => 'ON',              # fixme
        country        => 'Canada',          # fixme
        addressdetails => 0,                 # just basic results
        limit          => 1,                 # only want first result

        # it's considered polite to put your e-mail address in to the query
        # just so the server admins can get in touch with you
        email => 'me@mydomain.com',          # fixme

        # limit the results to a box (quite a bit) bigger than Toronto
        bounded => 1,
        viewbox => '-81.0,45.0,-77.0,41.0'   # left,top,right,bottom - fixme
    );

    # get the result from Nominatim, and decode it to an arrayref of hashes
    my $json = get( join( '?', BASEURI, escape_hash(%query_hash) ) );

    # get() returns undef on failure, and decode_json dies on bad JSON;
    # treat either case as "no result" rather than stopping the whole run
    my $result = defined $json ? eval { decode_json($json) } : undef;
    $result = [] unless ref $result eq 'ARRAY';

    if ( scalar(@$result) > 0 ) {            # if there is a result
        print join(
            '|',                             # print result as pipe separated values
            $name, $address,
            $result->[0]->{lat},
            $result->[0]->{lon},
            $result->[0]->{display_name}
          ),
          "\n";
    }
    else {                                   # no result; just echo input
        print join( '|', $name, $address ), "\n";
    }
}
exit;

sub escape_hash {
    # turn a hash into escaped string key1=val1&key2=val2...
    my %hash = @_;
    my @pairs;
    for my $key ( keys %hash ) {
        push @pairs, join( "=", map { uri_escape($_) } $key, $hash{$key} );
    }
    return join( "&", @pairs );
}

sub cleanaddress {
    # try to clean up street addresses a bit
    # doesn't understand proper 'Unit-Number' Canadian addresses tho.
    # (uses a named lexical: 'my $_' no longer compiles on Perl 5.24+)
    my $addr = shift;
    $addr =~ s/Unit.*//;     # shouldn't affect result
    $addr =~ s/Floor.*//;    # won't affect result
    $addr =~ s/\s+/ /g;      # remove extraneous whitespace
    $addr =~ s/ $//;
    $addr =~ s/^ //;
    return $addr;
}
```
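One thing geonom.pl doesn't do is pause between requests, which matters given the restrictions on bulk queries. Here's a minimal sketch of a throttle that could be called at the top of the loop. The one-second gap is an assumption based on the usage policy for OpenStreetMap's own Nominatim server (other hosts set their own limits), and `make_throttle` is a name made up for this example:

```perl
use strict;
use warnings;
use Time::HiRes qw(time sleep);

# Return a closure that, each time it is called, sleeps just long enough
# that successive calls are at least $min_gap seconds apart.
sub make_throttle {
    my ($min_gap) = @_;
    my $last = 0;    # time of the previous call (0 = never called)
    return sub {
        my $wait = $last + $min_gap - time();
        sleep($wait) if $wait > 0;
        $last = time();
        return;
    };
}

# Assumed limit: one request per second
my $throttle = make_throttle(1.0);

for my $address ( '301 Front St W', '100 Queen St W' ) {
    $throttle->();    # blocks here if the last request was too recent
    print "would geocode: $address\n";
}
```

The first call goes straight through; only later calls wait, so a short input file isn't slowed down much.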

It quickly became apparent that the addresses had been entered by hand, and weren’t going to geocode neatly. Here are some examples of the bad ones:

Curiously, some (like the address for Black Creek Pioneer Village) were right, but just not found. Since the source was open data, I put the right address into OpenStreetMap, so for next year, typos aside, we should be able to find more events.

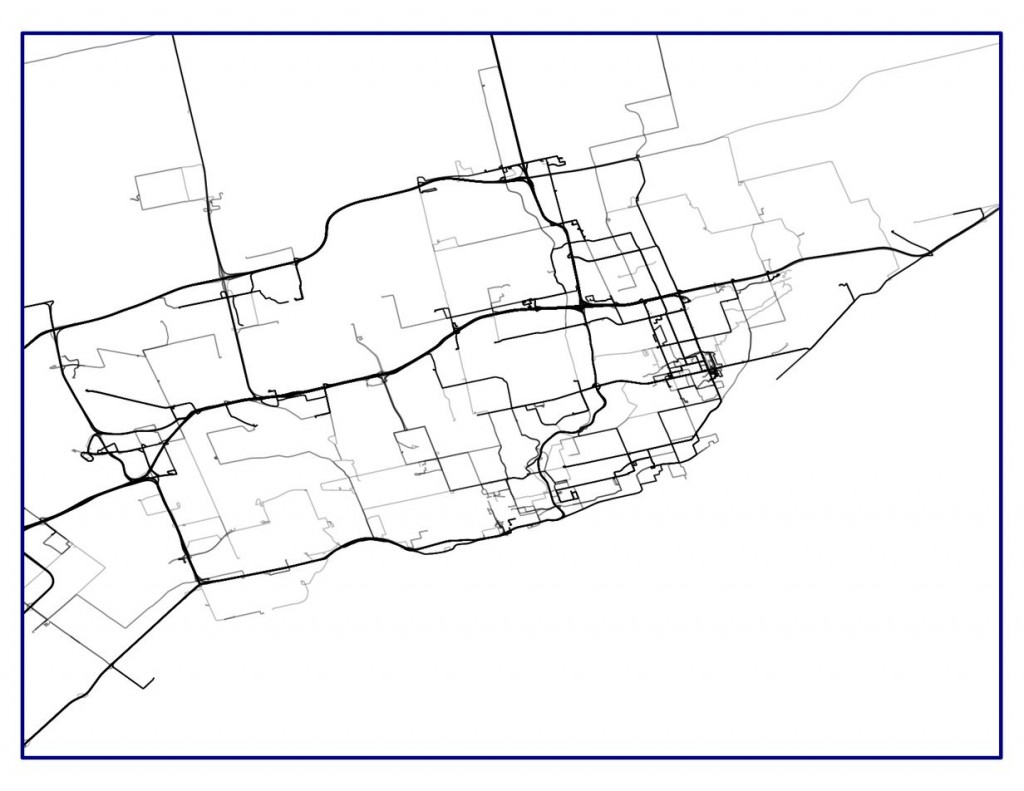

Now, how accurate were the results? Well, you decide:

Looks like the data sets at toronto.ca/open might finally actually be open; that is, usable in a way that doesn’t bind subsequent users to impossible terms. The new licence (which unfortunately is behind a squirrelly link) basically just requires you to put a reference to this paragraph somewhere near your data/application/whatever:

Contains public sector Datasets made available under the City of Toronto’s Open Data Licence v2.0.

and a link to the licence, where possible.

Gone are the revocation clauses, which really prevented any open use before, because they would require you to track down all the subsequent users of the data and get them to stop. Good. I think we can now use the data in OpenStreetMap.

While commenting on the licence’s squirrelly URL — I mean, could you remember http://www1.toronto.ca/wps/portal/open_data/open_data_fact_sheet_details?vgnextoid=59986aa8cc819210VgnVCM10000067d60f89RCRD? — I stumbled upon the comedy gold that is the City of Toronto Comments Wall log. There goes my planned reading for the day.

… or “トロント動物園”, as we locals purportedly call it. The “This place has unverified edits” legend is a bit of a giveaway. Looks like there’s been some messing about with Google Map Maker, which isn’t always the best tool for the job.

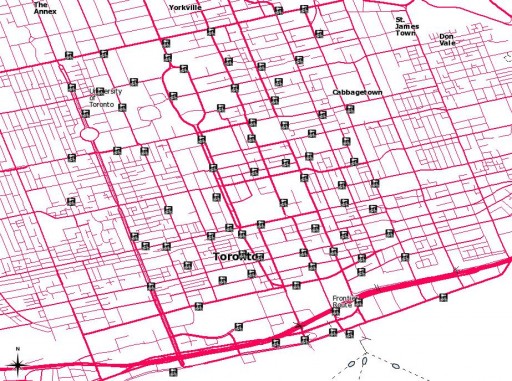

BIXI Toronto is (nearly) here!

Here are the proposed station locations: Bixi_Toronto_shp.zip (Shapefile) or Bixi_Toronto-kml_csv.zip (KML and CSV).

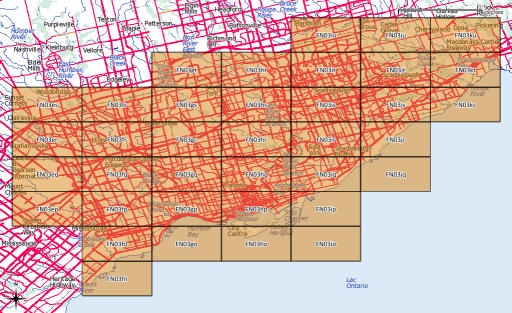

After yesterday’s post, I went a bit nuts with working out the whole amateur radio grid locator thing (not that I’m currently likely to use it, though). I’d hoped to provide a shapefile of the entire world, but that would be too big for the format’s 2GB file size limit.
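For readers who haven't met the locator system: a four-character Maidenhead locator is two letters naming a 20° by 10° field, then two digits naming a 2° by 1° square inside it. Here's a minimal sketch of the conversion from latitude and longitude. The function name `maidenhead` is made up for this example and isn't taken from the grid-making script:

```perl
use strict;
use warnings;
use POSIX qw(floor fmod);

# Convert latitude/longitude (decimal degrees) to a 4-character
# Maidenhead locator: field letters (20 x 10 degrees), then
# square digits (2 x 1 degrees).
sub maidenhead {
    my ( $lat, $lon ) = @_;

    # shift the origin to 180W, 90S so both values are non-negative
    my $x = $lon + 180;
    my $y = $lat + 90;

    # nudge the extreme edges (lon 180, lat 90) into the last cell
    $x = 359.999999 if $x >= 360;
    $y = 179.999999 if $y >= 180;

    my $field =
        chr( ord('A') + floor( $x / 20 ) )
      . chr( ord('A') + floor( $y / 10 ) );
    my $square = floor( fmod( $x, 20 ) / 2 ) . floor( fmod( $y, 10 ) );

    return $field . $square;
}

print maidenhead( 43.65, -79.38 ), "\n";    # downtown Toronto: prints FN03
```

With 18 × 18 fields of 100 squares each, that's 32,400 squares worldwide before any finer subdivision, which gives a feel for how quickly a whole-world file grows.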

What I can give you, though, is:

If anyone would like their grid square in Google Earth format, let me know, or read on …

Several people have asked, so here’s how you convert to KML. You’ll need the OGR toolkit installed, which comes in several open-source geo software bundles, such as FWTools, OSGeo4W, and QGIS. Let’s assume we want to make the grid square ‘EN’.

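```sh
# generate the grid for EN (the next step expects EN-maidenhead_grid.shp)
make_grid.pl en

# convert the whole of EN to KML
ogr2ogr -f KML EN-maidenhead_grid.kml EN-maidenhead_grid.shp

# or convert just the part where the Square attribute is 82
ogr2ogr -f KML -where "Square='82'" EN82-maidenhead_grid.kml EN-maidenhead_grid.shp
```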
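To check that a converted file covers the area you expect, it helps to know the bounding box of a locator. Here's a rough sketch that works it out for a four-character locator such as EN82. `square_bounds` is a name made up for this example and has nothing to do with make_grid.pl:

```perl
use strict;
use warnings;

# Return (west, south, east, north) in decimal degrees for a
# 4-character Maidenhead locator such as 'EN82'.
sub square_bounds {
    my ($loc) = @_;
    $loc = uc $loc;
    $loc =~ /^([A-R])([A-R])(\d)(\d)$/
      or die "not a 4-character locator: $loc\n";

    # fields are 20 x 10 degrees, squares are 2 x 1 degrees,
    # both counted from 180W, 90S
    my $west  = -180 + 20 * ( ord($1) - ord('A') ) + 2 * $3;
    my $south = -90 + 10 * ( ord($2) - ord('A') ) + $4;

    return ( $west, $south, $west + 2, $south + 1 );
}

print join( ',', square_bounds('EN82') ), "\n";    # prints -84,42,-82,43
```

The four numbers come out in xmin, ymin, xmax, ymax order, which is also the order ogr2ogr's `-spat` option takes if you'd rather filter by extent than by attribute.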