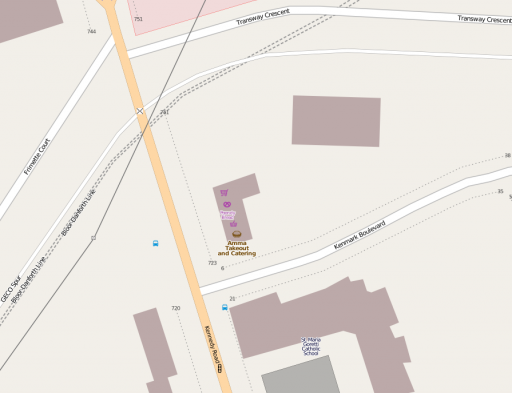

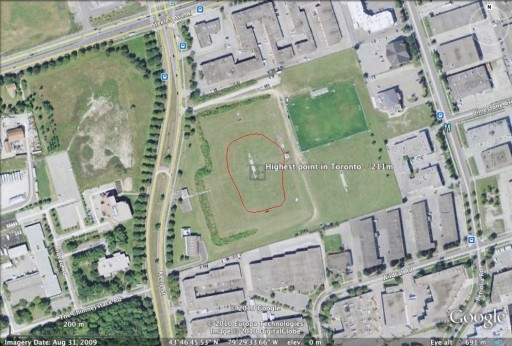

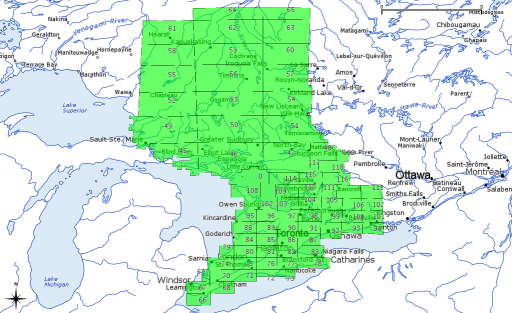

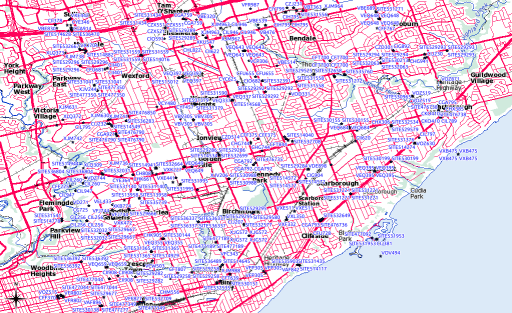

Industry Canada publishes the locations of all licensed radio spectrum users on Spectrum Direct. You can find all the transmitters/receivers near you by using its Geographical Area Search. And there are a lot near me:

While Spectrum Direct’s a great service, it has three major usability strikes against it:

- You can’t search by address or postal code; you need to know your latitude and longitude. Not only that, it expects your coordinates as an integer of the format DDMMSS.

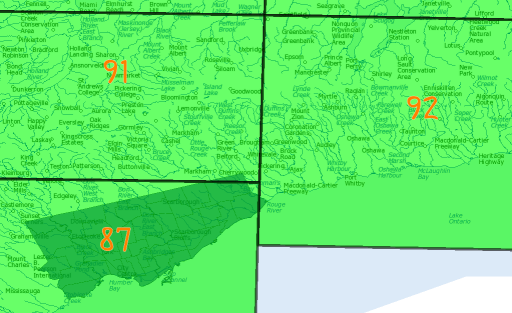

- It’s very easy to overwhelm the system. Where I live, I can only search about 5 km around me before the system times out.

- The output formats aren’t very useful. You can get either massively verbose XML or very long-line, undelimited text, and neither is easy to work with.
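Converting coordinates from a map or GPS into that packed DDMMSS form is fiddly to do by hand. Here is a minimal sketch of a hypothetical helper (`to_ddmmss` is my own name, not part of Spectrum Direct or of the script in this post). It assumes the form wants whole seconds and unsigned values, since all of Canada is north and west; check a result against the form before relying on it.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical helper: convert decimal degrees (e.g. 43.6532) to the
# packed DDMMSS string the Geographical Area Search expects.
# Assumptions: whole-second precision, sign dropped (N and W implied).
sub to_ddmmss {
    my ($decimal) = @_;

    # work in whole seconds, rounded to the nearest second
    my $total_seconds = int( abs($decimal) * 3600 + 0.5 );

    my $degrees = int( $total_seconds / 3600 );
    my $minutes = int( ( $total_seconds % 3600 ) / 60 );
    my $seconds = $total_seconds % 60;

    # degrees unpadded; minutes and seconds always two digits
    return sprintf( '%d%02d%02d', $degrees, $minutes, $seconds );
}

printf "%s %s\n", to_ddmmss(43.6532), to_ddmmss(-79.3832);
# prints: 433912 792300
```

Rounding to whole seconds first, rather than rounding minutes and seconds separately, avoids producing an invalid value like 60 seconds.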

Never fear, Perl is here! I wrote a tiny script that glues together Dave O’Neill’s Parse::SpectrumDirect::RadioFrequency module (can you guess what it does?) and Robbie Bow’s Text::CSV::Slurp module. The latter blorts out the former’s results to a CSV file that you can load into any GIS/mapping system.

Here’s the code:

```perl
#!/usr/bin/perl -w
# spectest.pl - generate CSV from Industry Canada Spectrum Direct data
# created by scruss on 02010/10/29 - for https://glaikit.org/
# usage: spectest.pl geographical_area.txt > outfile.csv

use strict;
use Parse::SpectrumDirect::RadioFrequency;
use Text::CSV::Slurp;

use constant MINLAT => 40.0;    # all of Canada is >40 deg N, for checking

my $prefetched_output = '';

# get the whole file as a string
while (<>) {
    $prefetched_output .= $_;
}

my $parser = Parse::SpectrumDirect::RadioFrequency->new();

# magically parse Spectrum Direct file
# ($! would report an unrelated OS error here, so use our own message)
$parser->parse($prefetched_output)
  or die "Could not parse Spectrum Direct data\n";

my $legend_hash = $parser->get_legend();    # get column descriptions
my @keys        = ();
foreach (@$legend_hash) {
    # retrieve column keys in order so the output will resemble input
    push @keys, $_->{key};
}

# get the data in a ref to an array of hashes
my $stations      = $parser->get_stations();
my @good_stations = ();

# clean out bad values: missing or implausibly southern latitudes
foreach (@$stations) {
    next if ( !defined $_->{Latitude} || $_->{Latitude} < MINLAT );
    push @good_stations, $_;
}

# create csv file in memory then print it
my $csv = Text::CSV::Slurp->create(
    input       => \@good_stations,
    field_order => \@keys
);

print $csv;
exit;
```

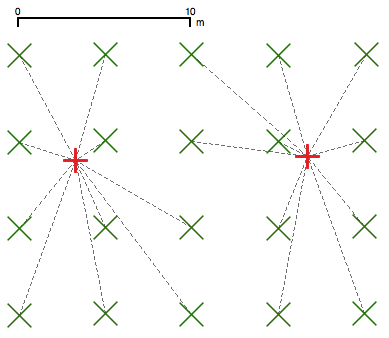

The results aren’t perfect: QGIS boaked on one file the script produced, where a record appeared to contain line breaks. The script could also do a better job of filtering out multiple pieces of equipment at the same call-sign location. But it mostly works, which is good enough for me.
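Both of those problems could probably be handled before the CSV is written. Here is a minimal, hypothetical sketch (`clean_stations` is my own name, not part of either module) that works on an array of station hashes like the one `get_stations()` returns: it replaces embedded line breaks with spaces and keeps only the first record for each combination of the key fields you pass in. The key names in the demo, such as `CallSign`, are assumptions and the records are made up; use the keys that `get_legend()` actually reports for your data.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical cleanup: flatten line breaks inside field values and
# drop records whose key fields duplicate an earlier record.
sub clean_stations {
    my ( $stations, @key_fields ) = @_;
    my %seen;
    my @cleaned;
    foreach my $station (@$stations) {
        my %copy = %$station;    # shallow copy; leave the input untouched

        # values() aliases the hash values, so this edits %copy in place
        foreach my $value ( values %copy ) {
            next unless defined $value;
            $value =~ s/[\r\n]+/ /g;    # line breaks become a single space
        }

        # composite key from the chosen fields; undef becomes ''
        my $key = join "\x1F",
          map { defined $copy{$_} ? $copy{$_} : '' } @key_fields;
        next if $seen{$key}++;    # already have this location/call sign

        push @cleaned, \%copy;
    }
    return \@cleaned;
}

# Made-up sample records, for illustration only
my @sample = (
    { CallSign => 'XXX123', Latitude => 43.65, Longitude => -79.38,
      Equipment => "Antenna\nmast" },
    { CallSign => 'XXX123', Latitude => 43.65, Longitude => -79.38,
      Equipment => 'Dish' },
    { CallSign => 'YYY456', Latitude => 43.70, Longitude => -79.40,
      Equipment => 'Whip' },
);

my $cleaned = clean_stations( \@sample, qw(CallSign Latitude Longitude) );
printf "%d records kept\n", scalar @$cleaned;    # prints: 2 records kept
```

In spectest.pl, you would call something like this on `\@good_stations` and pass the returned array reference as `input` to `Text::CSV::Slurp->create`. Keeping only the first record is a blunt choice; merging the equipment fields of duplicates into one row would preserve more information.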